Importing Git History to Elasticsearch

Ever wanted to explore source control changes with Elasticsearch? Below are the steps I took for importing a repository via the bulk API.

Roll up your sleeves! The following steps are not pretty, though they are simple.

Cleaning things up and releasing them in a tidy package would be a great project for somebody!

Below is a messy series of commands and scripts that will gather the git history of a repository and convert it to the bulk import format for Elasticsearch.

0. Grab the scripts from GitHub

git clone https://github.com/codingblocks/git-to-elasticsearch.git

1. Export the git history in a JSON-ish format

Change into the repository you want to import and export its history. (Note: the commands below assume git-to-elasticsearch was cloned next to that repository, so you may have to adjust the paths of the redirected output files.)

I found it easier to do this in two passes, one for the changed files and one for the commit metadata, and then join the data later in code.

git --no-pager log --name-status > ../git-to-elasticsearch/files.txt
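The files.txt pass is just git's default log output, with a status line (M, A, D, R100, ...) under each commit. As a rough illustration of what the later join has to work with, here's a hypothetical awk-based helper, name_status_to_tsv, that flattens that output into one commit/status/path row per changed file, keyed by the full hash that also appears as "commit" in history.txt. It's a sketch that assumes git's default log layout, not a description of what git2json.js actually does.

```shell
# Hypothetical helper: flatten files.txt into "commit<TAB>status<TAB>path" rows.
# Assumes git's default `log --name-status` layout: each entry starts with a
# "commit <sha>" line and each changed file is a line like "M<TAB>src/app.js".
name_status_to_tsv() {
  awk -F '\t' '
    # Remember the hash of the commit whose entry we are currently reading.
    /^commit [0-9a-f]+/ { split($0, parts, " "); sha = parts[2]; next }
    # Status lines (M, A, D, R100, ...) are the only unindented tab-separated
    # lines; for renames and copies the last field is the new path.
    /^[ACDMRTUXB][0-9]*\t/ { print sha "\t" $1 "\t" $NF }
  ' "$1"
}
```

For example: name_status_to_tsv ../git-to-elasticsearch/files.txt | head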

git log --pretty=format:'{%n "commit": "%H",%n "abbreviated_commit": "%h",%n "tree": "%T",%n "abbreviated_tree": "%t",%n "parent": "%P",%n "abbreviated_parent": "%p",%n "refs": "%D",%n "encoding": "%e",%n "subject": "%s",%n "sanitized_subject_line": "%f",%n "body": "%b",%n "commit_notes": "%N",%n "verification_flag": "%G?",%n "signer": "%GS",%n "signer_key": "%GK",%n "author": {%n "name": "%aN",%n "email": "%aE",%n "date": "%aD"%n },%n "commiter": {%n "name": "%cN",%n "email": "%cE",%n "date": "%cD"%n }%n},' | sed "$ s/,$//" | sed ':a;N;$!ba;s/\r\n\([^{]\)/\\n\1/g'| awk 'BEGIN { print("[") } { print($0) } END { print("]") }' > ../git-to-elasticsearch/history.txt

The above script was based on the work in this gist.
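The format string above doesn't escape anything, so a commit subject or body containing a double quote, or a multi-line body that the sed step doesn't collapse, can leave history.txt as invalid JSON. A quick sanity check before moving on might look like this; check_json is a hypothetical helper name, and it assumes python3 is installed.

```shell
# Sketch: verify that a generated file parses as JSON before moving on.
# Assumes python3 is on the PATH; json.tool ships with its standard library
# and exits non-zero when the input is not valid JSON.
check_json() {
  if python3 -m json.tool "$1" > /dev/null 2>&1; then
    echo "$1: valid JSON"
  else
    echo "$1: invalid JSON (look for quotes or line breaks in commit messages)" >&2
    return 1
  fi
}
```

Run it as check_json ../git-to-elasticsearch/history.txt.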

2. Format the data

This joins history.txt and files.txt and formats the result for import into Elasticsearch.

node git2json.js
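The bulk API expects newline-delimited JSON: an action line, then the document it applies to, with the whole body ending in a newline. Assuming the formatter emits one action line per document (which is what the tip about splitting on even lines at the end of this post suggests), a quick structural check of the output before uploading could look like this hypothetical check_bulk_file helper.

```shell
# Sketch: structural check of a bulk (NDJSON) file before uploading it.
# Assumes every document is preceded by exactly one action line (as with
# index/create actions), so a well-formed file has an even number of lines.
check_bulk_file() {
  local file="$1" lines
  # The bulk API requires the final line to end with a newline character.
  if [ -n "$(tail -c 1 "$file")" ]; then
    echo "$file: missing trailing newline" >&2
    return 1
  fi
  # tr strips the padding some wc implementations add.
  lines=$(wc -l < "$file" | tr -d ' ')
  if [ $((lines % 2)) -ne 0 ]; then
    echo "$file: odd line count ($lines); an action or document line is missing" >&2
    return 1
  fi
  echo "$file: $((lines / 2)) documents"
}
```

For example: check_bulk_file finaljson.json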

3. Import to Elasticsearch

This script uploads the formatted file to Elasticsearch:

./import.sh

A copy of the fully formatted data is also saved to finaljson.json, which is useful for debugging.
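The contents of import.sh aren't shown here, so treat this as a rough sketch of what a bulk upload boils down to rather than a copy of that script: one POST of the file to the _bulk endpoint. It assumes a local cluster on port 9200 with security disabled, and bulk_upload is a hypothetical name.

```shell
# Sketch of a bulk upload with curl. Assumes a cluster at localhost:9200 with
# security disabled; import.sh may differ (index name, host, credentials).
bulk_upload() {
  local file="$1" host="${2:-http://localhost:9200}"
  # --data-binary keeps the newlines the bulk format depends on; plain -d
  # would strip them.
  curl -sS -X POST "$host/_bulk" \
    -H 'Content-Type: application/x-ndjson' \
    --data-binary "@$file"
}
```

Call it as bulk_upload finaljson.json. The bulk API answers with HTTP 200 even when individual documents fail, so it's worth checking the "errors" field of the response as well.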

If you need a cluster to import into, here's a guide to quickly spinning up an Elastic Stack cluster: https://analytics.codingblocks.net/blog/quickstart-guide-elastic-stack-for-devs/

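4. Clean up

Once the import is done, remove the intermediate files from the git-to-elasticsearch directory:

rm history.txt files.txt finaljson.json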

5. Have fun!

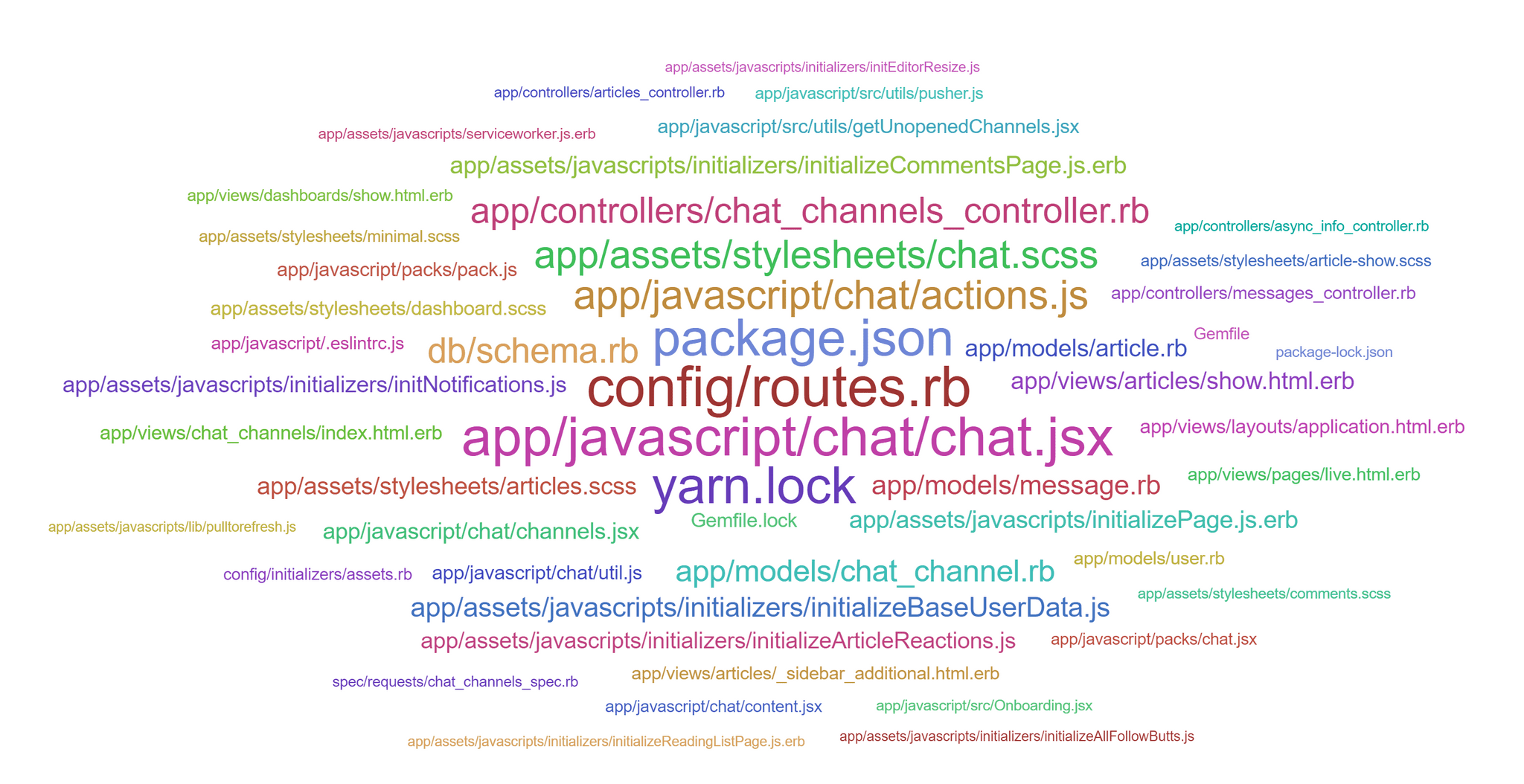

Here are a couple of ideas for what you might query now with Elastic. Stay tuned for my next blog post, where I'll show you how to answer all of these questions and more about one of my favorite sites: dev.to.

- What files are changed the most often?

- Who are the most active authors?

- At what time of day are the most commits made?
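As a taste of the second question, a terms aggregation can count commits per author. This sketch assumes git2json.js keeps the author object from the export, that the index is called git-history (a hypothetical name, use whatever import.sh targets), and that Elasticsearch's default dynamic mapping created an author.name.keyword sub-field.

```shell
# Sketch: ten most active authors via a terms aggregation.
# Assumptions: the index is named "git-history" (hypothetical) and strings were
# dynamically mapped, which gives author.name a keyword sub-field.
top_authors() {
  local index="${1:-git-history}" host="${2:-http://localhost:9200}"
  # size 0 skips the hits; only the aggregation buckets are returned.
  curl -sS -X POST "$host/$index/_search" \
    -H 'Content-Type: application/json' \
    --data-binary '{"size": 0, "aggs": {"top_authors": {"terms": {"field": "author.name.keyword", "size": 10}}}}'
}
```

Each bucket in the response's aggregations.top_authors.buckets carries an author name as key and a doc_count, which here is that author's number of commits.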

Check out the video showing the results!

Troubleshooting:

Q: Having a hard time redirecting output on WSL? A: Try pasting the git commands into a .sh file first, then run that script and redirect its output to the file instead.

Q: Having trouble reading files on Windows or WSL? A: Try converting the files to ASCII.

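From a Windows command prompt (cmd.exe), the /a switch makes internal commands such as type write ANSI output. It has to come before /c, and the output should go to a separate file rather than back onto the file being read:

cmd /a /c type history.txt > history_ascii.txt

move /y history_ascii.txt history.txt

cmd /a /c type files.txt > files_ascii.txt

move /y files_ascii.txt files.txt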
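If you're working in WSL instead and the files were written by Windows PowerShell's > redirection (which produces UTF-16LE), iconv can do the conversion. This is a hedged alternative that assumes iconv is installed and that the file starts with a byte-order mark; to_utf8 is a hypothetical helper name.

```shell
# Sketch for WSL: re-encode a UTF-16 file (e.g. from Windows PowerShell's ">")
# as UTF-8, which is byte-identical to ASCII for plain ASCII text.
# Assumes iconv is installed; the byte-order mark tells it the endianness.
to_utf8() {
  iconv -f UTF-16 -t UTF-8 "$1" > "$1.utf8" && mv "$1.utf8" "$1"
}
```

For example: to_utf8 history.txt && to_utf8 files.txt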

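Q: Having a hard time with large repositories? A: Elasticsearch rejects request bodies larger than 100mb by default. You can raise that limit with the http.max_content_length setting, but you might be better off splitting the file up. Make sure every split falls after an even-numbered line, since the bulk format pairs each action line with the document line that follows it.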
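Splitting with split -l and an even line count keeps every action line together with its document. A sketch, assuming the standard split utility; split_bulk_file and its default of 20000 lines are hypothetical, so pick a chunk size that keeps each piece under the limit.

```shell
# Sketch: split a bulk file into chunks of at most N lines (default 20000).
# N must be even so no action line is separated from its document line.
split_bulk_file() {
  local file="$1" lines="${2:-20000}" prefix="${3:-chunk_}"
  if [ $((lines % 2)) -ne 0 ]; then
    echo "chunk size must be even, got $lines" >&2
    return 1
  fi
  # Produces ${prefix}aa, ${prefix}ab, ... each ending on a complete line.
  split -l "$lines" "$file" "$prefix"
}
```

For example, split_bulk_file finaljson.json 20000 chunk_ produces chunk_aa, chunk_ab, and so on, which you can then send to the _bulk endpoint one at a time.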